The Struggle

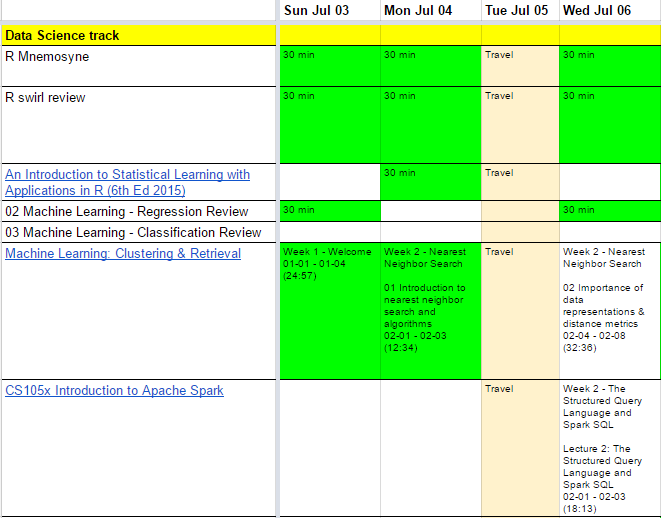

In my last blog post, I shared how I take notes while engaged in a MOOC for Data Science. I proceeded happily for months in this fashion, ringing each bell as I completed course after course in various specializations.

Around February of this year, however, a sinking feeling was starting to settle in: I just wasn’t retaining a lot of the information I was learning. Sure, I was scoring 100s on all the quizzes and completing the assignments on time without any issues, but I felt uneasy.

A month after I worked on the code for a gradient descent algorithm for a lab assignment, you think I had any clue what gradient descent was?

Two months after I learned how to create a support vector machine model in Python, you think I recalled what library to import to even start?

Three months after I learned to separate data into training & test groups in R, you think I could remember a single command to do so?

NO. I found myself constantly having to go back to older notes for the most basic commands. I was spending all of my time on StackOverflow looking for solutions to the most basic questions (like how to reverse a Python list, how to come up with 10 random integers in R, etc.). If I were to work seriously in the Data Science realm, I knew I needed a solid, fundamental level of proficiency with the tools and techniques I was expecting to use.

Enter Mnemosyne

Quite a while ago, I had gotten it into my mind to learn Japanese. My sole motivation: in my academic career, the only thing I absolutely sucked at was foreign languages, and it wasn’t for lack of effort. In my 30s, I wanted to wipe that blemish off my record by tackling one of the hardest languages for native English speakers to pursue.

I ran through all 3 levels of Rosetta Stone. I listened to every minute of Pimsleur’s entire Japanese collection. I had more books & videos than I knew what to do with. The most valuable tool I used, however, was the open-source flashcard system, Mnemosyne.

I confess, I didn’t try several different flashcard programs and settle upon this one as the best. But what I did want was a tool to help me identify the concepts I was struggling with and beat me over the head with them until they became second nature.

From their website:

Mnemosyne uses a sophisticated algorithm to schedule the best time for a card to come up for review. Difficult cards that you tend to forget quickly will be scheduled more often, while Mnemosyne won’t waste your time on things you remember well.
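
To make that scheduling idea concrete, here is a minimal Python sketch of a spaced-repetition scheduler in the style of the classic SM-2 algorithm. As far as I know, Mnemosyne's scheduler is derived from an early SM-2 variant, but this is not Mnemosyne's actual code: the `Card` class, the `review` function, and the 0 to 5 grading scale are my own illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class Card:
    """Hypothetical card state for this sketch (not Mnemosyne's data model)."""
    interval_days: int = 0   # days to wait before the next review
    easiness: float = 2.5    # SM-2 style easiness factor; higher means easier
    repetitions: int = 0     # consecutive successful reviews


def review(card: Card, grade: int) -> Card:
    """Return the card's new state after a review graded 0 (blank) to 5 (perfect)."""
    if not 0 <= grade <= 5:
        raise ValueError("grade must be between 0 and 5")

    if grade < 3:
        # Forgotten: restart the repetition count and show the card again tomorrow.
        return Card(interval_days=1, easiness=card.easiness, repetitions=0)

    repetitions = card.repetitions + 1
    if repetitions == 1:
        interval = 1
    elif repetitions == 2:
        interval = 6
    else:
        # Later intervals grow in proportion to how easy the card has been.
        interval = round(card.interval_days * card.easiness)

    # Lower grades shrink the easiness factor, so hard cards come back sooner.
    penalty = 5 - grade
    easiness = max(1.3, card.easiness + 0.1 - penalty * (0.08 + penalty * 0.02))
    return Card(interval_days=interval, easiness=easiness, repetitions=repetitions)


def simulate(grades):
    """Apply a sequence of grades to a fresh card and return its final state."""
    card = Card()
    for grade in grades:
        card = review(card, grade)
    return card


if __name__ == "__main__":
    print("easy card:", simulate([5, 5, 5]))
    print("hard card:", simulate([3, 3, 3]))
    print("forgotten card:", simulate([5, 5, 1]))
```

Running the script shows the behavior described in the quote: a card I keep answering perfectly gets pushed further out than one I barely recall, and a card I forget goes right back to a one-day interval.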